TL;DR

This article is part two of my article on ‘Managing the evolving landscape of data products.’ In this part, we examine the challenges of versioning data products, identify the inflection points at which a new version becomes a new product, and offer insights to help you improve data product (DP) design and management for a robust and scalable Data Mesh.

Data-driven innovation depends on managing data product versioning well, cataloguing versions efficiently, and sunsetting outdated products strategically.

Managing Data Product (DP) Versioning

Data product versioning presents challenges that organisations must address to effectively manage their data mesh architecture. These challenges include:

- Managing versions and dependencies

- Ensuring data consistency

- Facilitating communication and collaboration

- Maintaining backward compatibility

- Managing older version lifecycles

As requirements change, a data product must be able to move cleanly from one state to the next, so that it stays adaptable and keeps meeting evolving needs within the data mesh ecosystem.

Types of DP Versioning:

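- Semantic versioning (SemVer): Assign MAJOR.MINOR.PATCH version numbers to DP iterations or releases, so the number itself signals whether a change is breaking (MAJOR), backward-compatible (MINOR), or a fix (PATCH).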

- Versioning APIs: Control and maintain backward compatibility of DP APIs through versioning.

- Schema versioning: Manage controlled changes to data schemas while ensuring compatibility.

- Event versioning: Assign unique identifiers/event types to versions of transmitted data events.

- Time-based snapshots: Capture snapshots of the DP assets at specific points in time for record-keeping.

- Data lineage and metadata management: Implement systems to track data lineage and metadata for effective versioning. (Learn more about data pipelines, data catalogues, and lineage here.)
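To make semantic versioning concrete for a data product, here is a minimal sketch that bumps a MAJOR.MINOR.PATCH version. It assumes the team has already classified each change as "breaking", "additive", or "fix"; those category names and the `bump` helper are hypothetical conventions for this example, not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class SemVer:
    """A MAJOR.MINOR.PATCH version for a data product release."""
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "SemVer":
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.patch}"


def bump(version: SemVer, change: str) -> SemVer:
    """Return the next version for a (hypothetical) change category.

    breaking -> MAJOR (e.g. a column removed or its type changed)
    additive -> MINOR (e.g. a new optional column)
    fix      -> PATCH (e.g. a corrected transformation, same schema)
    """
    if change == "breaking":
        return SemVer(version.major + 1, 0, 0)
    if change == "additive":
        return SemVer(version.major, version.minor + 1, 0)
    if change == "fix":
        return SemVer(version.major, version.minor, version.patch + 1)
    raise ValueError(f"unknown change category: {change!r}")


current = SemVer.parse("2.3.1")
print(bump(current, "breaking"))  # 3.0.0
print(bump(current, "additive"))  # 2.4.0
print(bump(current, "fix"))       # 2.3.2
```

Because the dataclass is ordered field by field, versions compare numerically (1.10.0 sorts after 1.9.0), which a plain string comparison would get wrong.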

Examples of DP Versioning

For Snowflake tables, versioning builds on two native features: Time Travel and Cloning.

- Time Travel lets you query a table as it existed at an earlier point within its data retention period, so each past state effectively acts as a table version. To retrieve data as of a given moment, you specify a timestamp, a time offset, or a statement ID. This makes it possible to track changes, compare versions, and roll back when required.

- Cloning creates a copy of an existing table as of a designated moment. In Snowflake this is a zero-copy operation, so the clone initially shares storage with its source. This distinct version supports development, testing, or analysis while safeguarding the original, and clones can serve as snapshots or branches for different use cases and scenarios.
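As a sketch of how the two features map to SQL, the helpers below only build Snowflake statement strings; they do not connect to Snowflake. The `AT(TIMESTAMP => ...)`, `AT(OFFSET => ...)` and `CLONE` clauses are Snowflake syntax, while the helper names and the table names `orders` and `orders_v1_snapshot` are hypothetical. Whether a given point in time is still reachable depends on your account's Time Travel retention settings.

```python
from datetime import datetime
from typing import Optional


def at_clause(ts: Optional[datetime] = None, offset_seconds: Optional[int] = None) -> str:
    """Build a Snowflake AT(...) clause from a timestamp or a non-positive offset in seconds."""
    if (ts is None) == (offset_seconds is None):
        raise ValueError("pass exactly one of ts or offset_seconds")
    if ts is not None:
        return f"AT(TIMESTAMP => '{ts:%Y-%m-%d %H:%M:%S}'::TIMESTAMP_LTZ)"
    if offset_seconds > 0:
        raise ValueError("offset must be zero or negative (seconds in the past)")
    return f"AT(OFFSET => {offset_seconds})"


# Note: table names are interpolated as-is; only pass trusted, validated identifiers.
def time_travel_query(table: str, **point) -> str:
    """Read a table as it existed at an earlier point, i.e. one 'version' of it."""
    return f"SELECT * FROM {table} {at_clause(**point)};"


def clone_version(source: str, target: str, **point) -> str:
    """Clone a table as of a given point and keep the clone as a separate version."""
    return f"CREATE TABLE {target} CLONE {source} {at_clause(**point)};"


print(time_travel_query("orders", offset_seconds=-3600))
# SELECT * FROM orders AT(OFFSET => -3600);
print(clone_version("orders", "orders_v1_snapshot", ts=datetime(2024, 5, 1)))
# CREATE TABLE orders_v1_snapshot CLONE orders AT(TIMESTAMP => '2024-05-01 00:00:00'::TIMESTAMP_LTZ);
```

Naming the clone after the DP version it captures (here `_v1_snapshot`) keeps the relationship between the branch and the release visible in the warehouse itself.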

In Kafka-based streams, DP output ports are versioned through topic versions and evolving message schemas. Each version of a product’s output stream corresponds to a dedicated Kafka topic, distinguished and managed by the version number or label assigned to it.

Message schema evolution is crucial to versioning Kafka streams. As the data product evolves and changes its output schema, it is vital to ensure backward compatibility so that downstream consumers do not break. Serialization formats such as Avro or Protobuf, which support schema evolution and compatibility rules, can help.
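The idea of a compatibility rule can be sketched with a deliberately simplified, conservative check: a change counts as non-breaking only if no field is removed, no field changes type, and every newly added field has a default. This is an assumption for illustration, not Avro's or Protobuf's actual schema-resolution rules; in practice such checks are usually enforced by a schema registry such as Confluent Schema Registry. The dictionary schema format below is hypothetical.

```python
from typing import Dict, List

# Simplified schema: field name -> {"type": <type name>, optionally "default": <value>}.
Schema = Dict[str, dict]


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """List the changes this conservative rule treats as breaking for consumers."""
    problems = []
    for name, spec in old.items():
        if name not in new:
            problems.append(f"field removed: {name}")
        elif new[name]["type"] != spec["type"]:
            problems.append(f"type changed: {name} {spec['type']} -> {new[name]['type']}")
    for name, spec in new.items():
        if name not in old and "default" not in spec:
            problems.append(f"new field without default: {name}")
    return problems


v1 = {"order_id": {"type": "string"}, "amount": {"type": "double"}}
v2_ok = {**v1, "currency": {"type": "string", "default": "EUR"}}
v2_bad = {"order_id": {"type": "long"}, "channel": {"type": "string"}}

print(breaking_changes(v1, v2_ok))  # []
print(breaking_changes(v1, v2_bad))
# ['type changed: order_id string -> long', 'field removed: amount', 'new field without default: channel']
```

Under this rule, a non-empty result is a signal to publish the change as a new major version on its own topic rather than evolving the existing one in place.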

When a new DP version is released, the Kafka topic and message schema can be updated accordingly. Consumers can then choose the version that matches their compatibility needs and requirements.
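One way consumers could pick a version is sketched below, assuming a hypothetical topic naming convention of `<domain>.<product>.v<major>` (for example `sales.orders.v2`). The convention, the helper names, and the hard-coded topic list are illustrative; in a real deployment the topic names would come from your cluster's metadata.

```python
import re
from typing import Iterable, Optional, Set

# Hypothetical naming convention: <domain>.<product>.v<major>
TOPIC_PATTERN = re.compile(
    r"^(?P<domain>[a-z0-9_-]+)\.(?P<product>[a-z0-9_-]+)\.v(?P<major>\d+)$"
)


def topic_name(domain: str, product: str, major: int) -> str:
    """Name of the dedicated topic for one major version of a DP output port."""
    return f"{domain}.{product}.v{major}"


def pick_topic(topics: Iterable[str], domain: str, product: str,
               supported_majors: Set[int]) -> Optional[str]:
    """Return the highest-versioned topic of this product that the consumer supports."""
    best = None
    for topic in topics:
        m = TOPIC_PATTERN.match(topic)
        if not m or m["domain"] != domain or m["product"] != product:
            continue  # not a versioned topic of this product
        major = int(m["major"])
        if major in supported_majors and (best is None or major > best[0]):
            best = (major, topic)
    return best[1] if best else None


available = ["sales.orders.v1", "sales.orders.v2", "sales.orders.v3", "sales.returns.v1"]
print(pick_topic(available, "sales", "orders", {1, 2}))  # sales.orders.v2
print(pick_topic(available, "sales", "orders", {4}))     # None
```

Encoding only the major version in the topic name keeps minor, non-breaking schema changes on the same topic, while a breaking change gets a new topic that consumers opt into.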

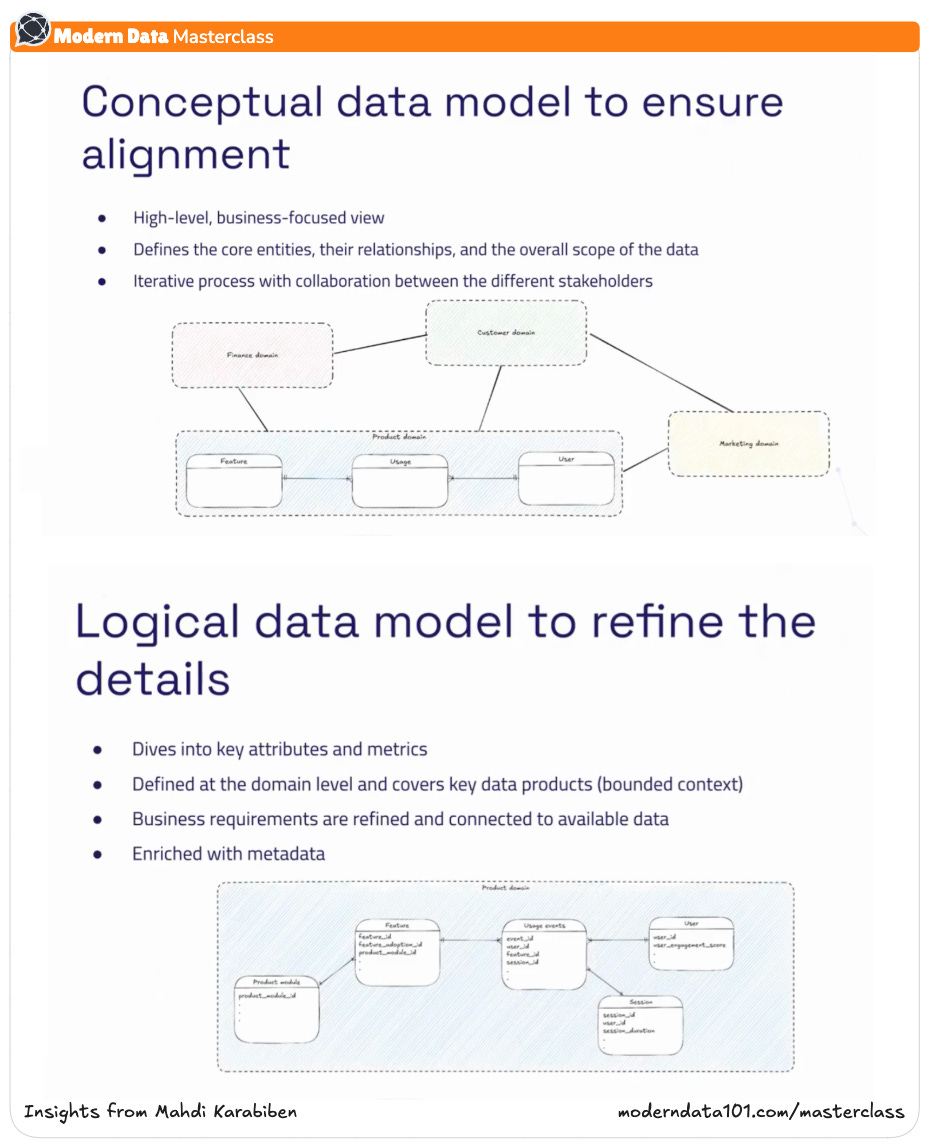

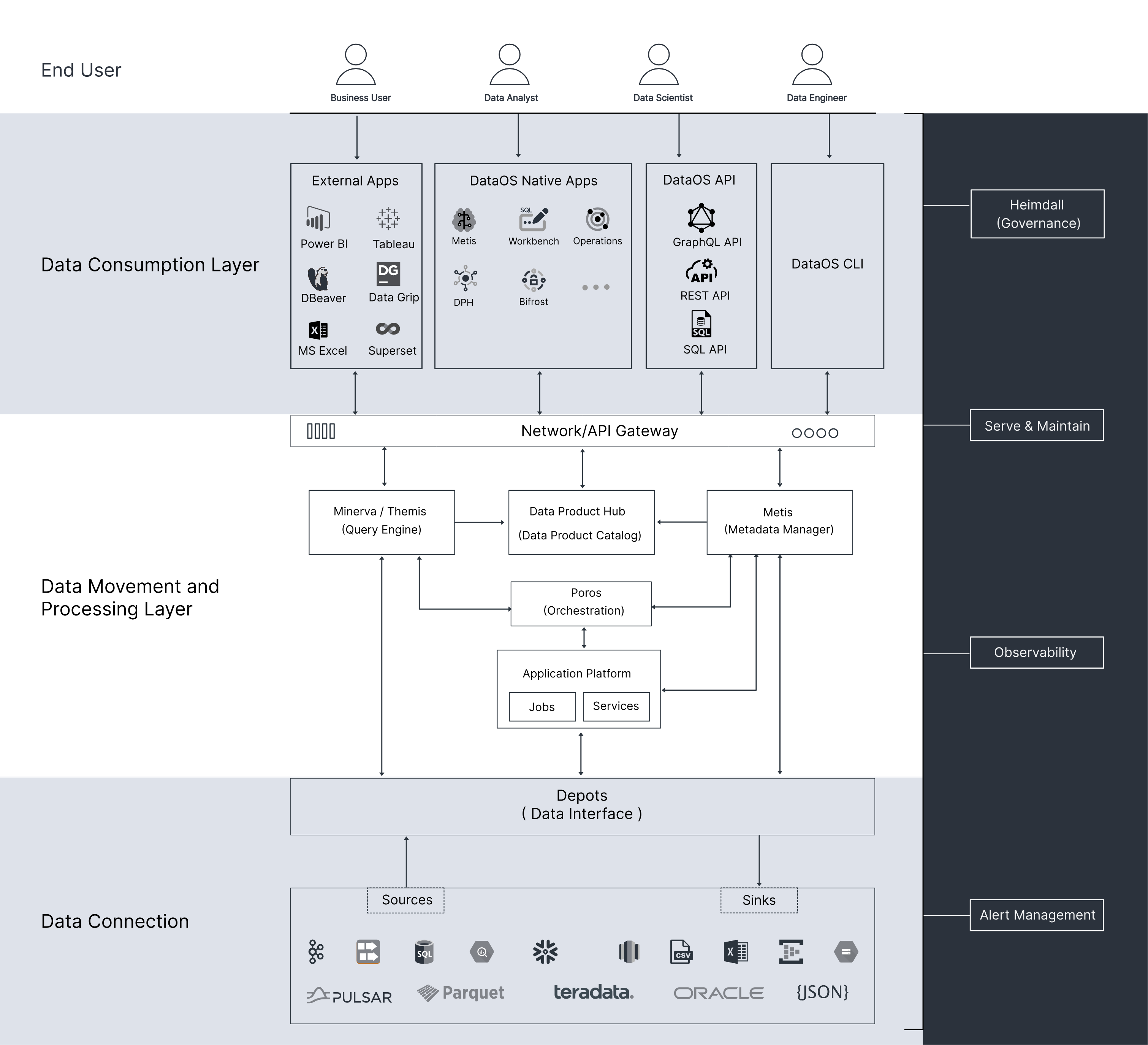

Cataloguing DP Versions

Organisations should ensure that each version of a data product is catalogued separately. For each version, the catalogue entry should clearly state the datasets, key attributes such as consolidation levels and business keys, the definition of the DP, and the dataset elements or components, which can go as deep as the version of individual columns. The aim is to state the structure and objective of each version clearly. Each version should also define its own data lineage. Finally, the catalogue should highlight what has changed between versions so that consumers can make an informed decision.
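A catalogue entry per version, and a summary of what changed between two entries, might look like the sketch below. The `CatalogVersion` fields mirror the attributes listed above, but the class, its field names, and the sample product are hypothetical and not the data model of any particular catalogue tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CatalogVersion:
    """Hypothetical catalogue entry for one version of a data product."""
    product: str
    version: str
    definition: str
    business_keys: List[str]
    grain: str                 # consolidation level, e.g. "one row per order"
    columns: Dict[str, str]    # column name -> type
    upstream: List[str] = field(default_factory=list)  # lineage: source datasets


def describe_changes(old: CatalogVersion, new: CatalogVersion) -> List[str]:
    """Summarise what changed between two catalogued versions, for consumers."""
    notes = []
    if old.grain != new.grain:
        notes.append(f"grain: {old.grain!r} -> {new.grain!r}")
    if old.business_keys != new.business_keys:
        notes.append(f"business keys: {old.business_keys} -> {new.business_keys}")
    for col in sorted(old.columns.keys() - new.columns.keys()):
        notes.append(f"removed column: {col}")
    for col in sorted(new.columns.keys() - old.columns.keys()):
        notes.append(f"added column: {col} ({new.columns[col]})")
    for col in sorted(old.columns.keys() & new.columns.keys()):
        if old.columns[col] != new.columns[col]:
            notes.append(f"retyped column: {col} {old.columns[col]} -> {new.columns[col]}")
    for src in sorted(set(new.upstream) - set(old.upstream)):
        notes.append(f"new upstream source: {src}")
    return notes


v1 = CatalogVersion(
    product="customer_orders", version="1.0.0", definition="Orders placed per customer",
    business_keys=["order_id"], grain="one row per order",
    columns={"order_id": "string", "amount": "number"}, upstream=["crm.orders"],
)
v2 = CatalogVersion(
    product="customer_orders", version="2.0.0", definition="Orders placed per customer",
    business_keys=["order_id"], grain="one row per order",
    columns={"order_id": "string", "amount": "decimal", "channel": "string"},
    upstream=["crm.orders", "app.events"],
)
for note in describe_changes(v1, v2):
    print(note)
```

Generating the change summary from the structured entries, rather than writing it by hand, keeps the "what changed" notes consistent with what is actually catalogued.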

Use cataloguing to make data products easy to discover across the data mesh. This helps users identify different data products and understand the differences between their versions. The catalogue should include information about the data product’s lifecycle, including version details, changes, support duration, compatibility, and more. Include details about how to access the data and information about the datasets. Also, provide updates on Service Level Objectives (SLOs) and Service Level Indicators (SLIs) for each version. This approach makes it easier for users to find and use the data products effectively in a self-service way.

By cataloguing datasets as distinct DP versions within a single catalogue community, organisations can report SLI/SLO metrics per dataset. Version-level access grants offer fine-grained control over data access and permissions.

This enables:

- Tailored exploration of DP versions by users

- Self-service capabilities

- Efficient data accessibility across the organisation
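Version-level access grants can be illustrated with a small in-memory sketch keyed by (product, version). The `VersionGrants` class and the group names are hypothetical; a real platform would map these grants onto the catalogue's or warehouse's own permission model.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class VersionGrants:
    """Hypothetical grant store: (product, version) -> groups allowed to read it."""
    grants: Dict[Tuple[str, str], Set[str]] = field(default_factory=dict)

    def grant(self, product: str, version: str, group: str) -> None:
        self.grants.setdefault((product, version), set()).add(group)

    def revoke_all(self, product: str, version: str) -> None:
        """Remove every grant on one version, e.g. when it is archived."""
        self.grants.pop((product, version), None)

    def can_read(self, product: str, version: str, user_groups: Set[str]) -> bool:
        """True if any of the user's groups was granted this exact version."""
        return bool(self.grants.get((product, version), set()) & user_groups)


acl = VersionGrants()
acl.grant("customer_behaviour", "2.0.0", "marketing")
acl.grant("customer_behaviour", "1.4.0", "finance")
print(acl.can_read("customer_behaviour", "2.0.0", {"marketing"}))  # True
print(acl.can_read("customer_behaviour", "1.4.0", {"marketing"}))  # False
```

Keying grants on the exact version, instead of the product as a whole, is what lets one team move to a new version while another keeps access only to the one it has validated.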

Organisations can define and enforce version-level data quality standards, data lineage, and other relevant information for consistency and accuracy.

Word of caution: data product cataloguing is still an evolving space, with few out-of-the-box industry solutions in the context of data mesh. Organisations might need to design custom capabilities to optimise cataloguing across versions for easy, unambiguous discovery.

Cataloguing tools like Collibra help manage, update, and govern data products and their versions, track their lifecycle, set deadlines for outdated or retired versions, and ensure smooth, transparent migration.
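Setting deadlines for outdated versions can be modelled as a simple lifecycle with deprecation and retirement dates. The sketch below is a hypothetical model for illustration only; it is not Collibra's data model or API.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class Status(Enum):
    ACTIVE = "active"
    DEPRECATED = "deprecated"  # still served; consumers should migrate
    RETIRED = "retired"        # no longer served


@dataclass
class VersionLifecycle:
    """Hypothetical lifecycle record for one DP version."""
    version: str
    deprecated_on: Optional[date] = None
    retire_on: Optional[date] = None

    def status(self, today: date) -> Status:
        if self.retire_on is not None and today >= self.retire_on:
            return Status.RETIRED
        if self.deprecated_on is not None and today >= self.deprecated_on:
            return Status.DEPRECATED
        return Status.ACTIVE

    def days_left(self, today: date) -> Optional[int]:
        """Days until retirement, or None if no retirement date is set."""
        if self.retire_on is None:
            return None
        return max((self.retire_on - today).days, 0)


v1 = VersionLifecycle("1.4.0", deprecated_on=date(2024, 1, 1), retire_on=date(2024, 6, 30))
today = date(2024, 3, 1)
print(v1.status(today).value, v1.days_left(today))  # deprecated 121
```

Publishing `days_left` alongside each deprecated version in the catalogue gives consumers a concrete migration deadline instead of an open-ended warning.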

📝 Note from Editor: Learn more about catalogs and their roles in data product space here: How to Build Data Products? Deploy: Part 3/4

Sundowning Data Products

1. Inflection point: The boundary where a DP is no longer a version change but a new data product.

2. How to effectively manage the sundowning process and minimise disruption to downstream consumers.

Distinguishing between DP version change and new product emergence is crucial to Data Mesh management. It is essential to identify where the description of the data product needs significant changes to align with the dataset and use case it supports. This indicates the birth of a new data product, while the previous version can be archived/retired.

By effectively recognising these inflection points, organisations can ensure proper governance and evolution of their Data Mesh.

Factors That Help Identify Inflection Points:

- Evolving requirements: New use cases, business shifts, or added data sources may reshape a data product, even leading to a new one.

- Tailored access needs: Varying consumer demands and SLAs may signal a need to split or redefine the data product.

- Performance scaling: Rising data volume may demand performance improvements or the creation of specialised versions.

- Granularity shifts: Shifting data granularity or composition can drive new use cases, justifying a tailored, distinct data product.

- Feedback and iteration: Consumer/stakeholder feedback can unveil transformative improvements, indicating distinct DP emergence.

- Governance and ownership: Robust data governance and ownership clarify when a DP’s scope transforms enough to consider it a new entity.

With vigilant monitoring, organisations can seize opportunities to create new DPs. This keeps data products relevant and aligned with their consumers, and advances data-driven objectives.

Consider The Example Of An E-Commerce Company:

The company’s domain team launched an internal data product called “Customer Behaviour Analytics” that analyses customer behaviour/preferences on the website. This DP caters to marketing and product development teams.

As the company grows and launches a mobile app, marketing seeks focused mobile behaviour insights, while product development demands finer-grained data on specific app features.

The DP “Customer Behaviour Analytics” needs significant changes to meet these new requirements. Hence, a new DP, “Mobile App Customer Insights”, dedicated to scrutinising app interactions and addressing these specific requirements, should be created.

By recognising this inflection point, the team can split the original data product into two data products tailored for unique needs.
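The factors listed earlier, and the split in this example, could be encoded as a rough heuristic. The sketch below is entirely hypothetical: the `ProductShape` fields, the rule that two or more "identity" shifts mean a new product, and the sample values for the web and app products are illustrative assumptions, not an established method.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class ProductShape:
    """Hypothetical summary of what defines a data product."""
    grain: str
    business_keys: Set[str]
    use_cases: Set[str]
    sla_tier: str


def classify_change(old: ProductShape, new: ProductShape, schema_breaking: bool) -> str:
    """Rule of thumb: several identity shifts -> new product; breaking change -> major version."""
    identity_shifts: List[str] = []
    if old.grain != new.grain:
        identity_shifts.append("granularity")
    if old.business_keys != new.business_keys:
        identity_shifts.append("business keys")
    if not (old.use_cases & new.use_cases):
        identity_shifts.append("use cases")  # no overlap with the original audience
    if old.sla_tier != new.sla_tier:
        identity_shifts.append("access/SLA needs")
    if len(identity_shifts) >= 2:  # arbitrary threshold, tune per organisation
        return "new data product (" + ", ".join(identity_shifts) + ")"
    if schema_breaking or identity_shifts:
        return "new major version"
    return "minor or patch version"


web = ProductShape("one row per web session", {"session_id"},
                   {"marketing campaigns", "product roadmap"}, "daily")
app = ProductShape("one row per app feature interaction", {"device_id", "event_id"},
                   {"mobile marketing", "app feature analysis"}, "hourly")
print(classify_change(web, app, schema_breaking=True))
# new data product (granularity, business keys, use cases, access/SLA needs)
print(classify_change(web, web, schema_breaking=False))  # minor or patch version
```

The value of such a rule is less the verdict itself than forcing the team to state, in the catalogue, which defining attributes a proposed change touches.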

Steps To Decommission A Data Product:

- Review and assess: Evaluate the old DP/version for usage, relevance, and impact. Determine if it is no longer needed or if alternative solutions are available.

- Notify stakeholders: Reach out to stakeholders, including data consumers, data owners, and support teams, and convey your decision to decommission or archive the old DP/version, along with a timeline. (Read more on the impact of your data product strategy on different business stakeholders.)

- Data migration and backup: If the old DP/version contains valuable data, planning and executing a smooth data migration or backup process is crucial. Ensure that data integrity is maintained throughout.

- Update documentation: Revise the documentation and metadata to reflect the decommissioning or archival status of the old DP/version. Make it clear that support and active maintenance for this DP/version have ceased.

- Address dependencies: Identify dependencies on the old DP/version within the Data Mesh. Collaborate with relevant teams to update or replace these dependencies, ensuring seamless transitions for downstream consumers/DPs.

- Implement access restrictions: Revise access controls and permissions to limit access to the old DP/version. Only authorised users or those responsible for archiving the data should access decommissioned or archived components.

- Retire infrastructure: If the old DP/version used specific infrastructure or resources, systematically retire or release them. This might involve shutting down servers, terminating databases, or freeing storage space.

- Monitor and validate: Regularly monitor the impact of decommissioning or archiving the old DP/version to ensure the Data Mesh remains stable and functional.
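The "Address dependencies" step can be supported by walking the lineage graph. The sketch below assumes a hypothetical lineage map in which each node lists the DPs or versions that consume it directly; it finds every direct and transitive consumer to notify, and gates infrastructure retirement on all direct consumers having migrated. The node names and the gating rule are illustrative assumptions.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: node -> DPs/versions that consume it directly.
Lineage = Dict[str, List[str]]


def downstream_of(lineage: Lineage, start: str) -> List[str]:
    """All direct and transitive consumers of `start`, in breadth-first order."""
    seen: Set[str] = {start}
    order: List[str] = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for consumer in lineage.get(node, []):
            if consumer not in seen:
                seen.add(consumer)
                order.append(consumer)
                queue.append(consumer)
    return order


def ready_to_retire(lineage: Lineage, target: str, migrated: Set[str]) -> bool:
    """Retire infrastructure only once every direct consumer has moved off the target."""
    return all(consumer in migrated for consumer in lineage.get(target, []))


lineage = {
    "customer_behaviour@1": ["campaign_roi@2", "churn_model_features@1"],
    "churn_model_features@1": ["churn_dashboard"],
}
print(downstream_of(lineage, "customer_behaviour@1"))
# ['campaign_roi@2', 'churn_model_features@1', 'churn_dashboard']
print(ready_to_retire(lineage, "customer_behaviour@1", {"campaign_roi@2"}))  # False
```

Transitive consumers such as `churn_dashboard` do not read the old version directly, so they belong on the notification list rather than the migration gate.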

Conclusion

Data products are the bedrock of a Data Mesh, functioning as cohesive units. The steps and considerations for versioning, cataloguing, and decommissioning them may vary depending on the organisation and the nature of the data product.

🔑Key Takeaways:

- Effective versioning processes are essential for managing updates, facilitating collaboration, and ensuring compatibility across data products.

- Sundowning processes like retiring/archiving older DP versions prevent the accumulation of outdated components.

Author Connect

Ayush Sharma

Experienced Software Engineer with a proven track record in the information technology and services industry. Proficient in Analytical Skills, Operations Management, and Java.

Modern Data 101

More about

Versioning, Cataloging, and Decommissioning Data Products
