TL;DR

The data value chain has been fairly well known over the years, especially since data began to be used at scale for commercial projects. The data value chain was originally conceived to tackle data bias, a subset of data quality. And as we all know, even achieving the singular objective of data quality involves the whole nine yards of processes, tooling, and data personas. A data value chain strives to deliver useful data through a unique combination of all three.

“Strives” is the key word here, because “valuable data” or the data product paradigm still seems like a distant dream, and I’m afraid this is not just my opinion. I’ve had the opportunity to speak with a handful of folks from the data and analytics domain and found unanimous agreement that even data design architectures such as data meshes or data fabrics are essentially theoretical paradigms and, at the moment, lack clear implementation pathways to practically enable data products quickly and at scale.

While the data value chain clearly demarcates the stages of the data journey, the problem lies in how data teams are compelled to solve each stage with a plethora of tools and integration dependencies that isolate each stage, directly weakening its ties to business ROI.

To understand how to re-engineer the data value chain and resolve its persistent issues, let’s first look at the data value chain itself. In this piece, my objective is not to dive deep into the individual stages, but to highlight overall transformations to the framework’s foundational approach, such as the unidirectional movement of data and logic.

What is the data value chain?

A data value chain is simply a framework that demarcates the journey of data from inception to consumption. Vendors and analysts have grouped its stages in various ways.

Historically, the following five stages have prevailed:

- Data collection: This is where data is collected from heterogeneous sources, be it the customer’s S3 buckets, a bunch of folders with Excel files, MySQL servers, etc. The raw data collected is varied because of the vast number of sources it is channelled from. This stage is critical due to data sensitivity, security issues, latency and throughput expectations, etc.

- Data analysis: The raw data collected is pre-analysed to understand data formats, types, transformation requirements, and validity. At this stage, data engineers could also run sanity checks on the data to understand if there are any gaps in data collection. In traditional data management approaches, the data model is owned by the centralised data engineering teams who can check if the data fulfils the model’s requirements.

- Data transformation: At the data transformation stage, the raw data from heterogeneous sources is wrangled into a uniform state that can be consumed by end-user applications and machines. The transformation and storage stages can swap order depending on whether an organisation adopts an ETL or an ELT approach.

- Data storage: Once the data is transformed, or once the transformation logic has been figured out, the data is lined up for storage so applications can directly consume the data from the warehouse/lake/lakehouse.

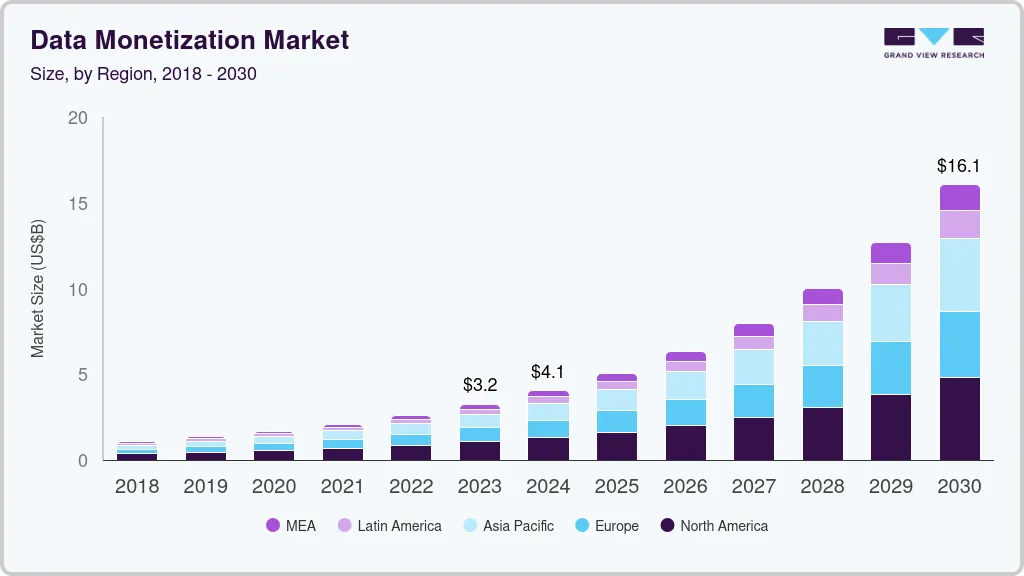

- Data monetisation: Data monetisation is simply another word for data activation. At this stage, the data is channelled into use-case-specific data applications so it can directly impact customers and business data users. In the prevalent data value chain, this is the stage where the ROI of data teams is linked and realised, while the operations in the previous stages have no clear ties or direct contact with the actual dollar value generated.
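To make the left-to-right flow concrete, here is a minimal sketch of the five stages as a linear pipeline in Python. The stage functions, record fields, and the in-memory `storage` list are hypothetical stand-ins; the sketch only shows that the chain runs in one direction, that monetisation is the sole stage touching a business outcome, and that ETL and ELT differ only in the order of the transformation and storage stages.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Stage = Callable[[List[Record]], List[Record]]

storage: List[Record] = []  # hypothetical stand-in for a warehouse/lake/lakehouse table


def collect(_: List[Record]) -> List[Record]:
    # Hypothetical raw records from heterogeneous sources; types are inconsistent.
    return [
        {"source": "s3", "amount": "12.5"},
        {"source": "mysql", "amount": 7},
        {"source": "excel", "amount": None},
    ]


def analyse(records: List[Record]) -> List[Record]:
    # Sanity check: drop records with gaps from the collection stage.
    return [r for r in records if r["amount"] is not None]


def transform(records: List[Record]) -> List[Record]:
    # Wrangle every record into one uniform type.
    return [{**r, "amount": float(r["amount"])} for r in records]


def store(records: List[Record]) -> List[Record]:
    storage[:] = records  # persist the data in whatever state it has reached
    return records


def monetise(records: List[Record]) -> List[Record]:
    # The only stage where data meets a business use case.
    return [{"total_revenue": sum(r["amount"] for r in records)}]


ETL: List[Stage] = [collect, analyse, transform, store, monetise]
ELT: List[Stage] = [collect, analyse, store, transform, monetise]


def run(stages: List[Stage]) -> List[Record]:
    data: List[Record] = []
    for stage in stages:  # strictly left to right, no feedback
        data = stage(data)
    return data
```

Both orderings produce the same revenue figure, but under ELT the stored records keep their raw types, which is the interchangeability described in the transformation stage.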

What’s missing in this existing value chain is a link between the stages: data monetisation is the last stage, while all the stages before it are typically isolated, and there is no way for data teams or organisations to tie the value of operations in each stage to the final business ROI. Even though this has been regular practice across data teams and organisations for well over half a decade, it is one of the largest contributors to leaking ROI.

Why is the prevalent data value chain a contributor to the leaking ROI of data teams?

The data value chain evolved over time along with the data industry to satisfy the growing demand for data-centric solutions as well as the growing volume of data generated and owned by organisations.

In hindsight, the path of evolution over the years was one of many that could have emerged. However, in my opinion, it was also one of the best natural evolutionary routes for the data industry, even though the current state of the data value chain is a growing problem for organisations.

I say this because the entire data stack was then preliminary, adolescent at best. If data teams and leaders had not focused on maturing the underlying nuts and bolts, our learning as a data industry could have been stunted. Perhaps we would never have developed robust components, or even understood the need for them in the underlying data stacks.

However, we cannot overlook the fact that in the current state of data overwhelm, the historical data value chain does more harm than good. It eats away at the ROI of data teams in the following ways:

- Isolated monetisation stage

Because data teams today largely follow a maintenance-first, data-last approach due to the limitations of prevalent data stacks or infrastructure, ROI, or the outcome of data-driven initiatives, becomes an afterthought. This is the natural result of implementing processes that are not driven by an outcome-first ideology. Every stage in the prevalent data value chain is isolated, with no visible or measurable ties to business impact.

- Left-to-right approach

In prevalent data management processes, data moves from left to right, which means business or domain teams barely get a say in how the data is modelled or managed. Data ownership sits with the centralised data engineering team, which does not have a good understanding of the various business domains it handles data for. As a result, at the final stage of data monetisation or activation, businesses often realise that critical information is missing or has been incorrectly transformed, leading to multiple iterations. In other words: hundreds of tickets for data engineers, and unnecessarily delayed and devalued business outcomes.

- Isolated and overwhelming tooling at each stage

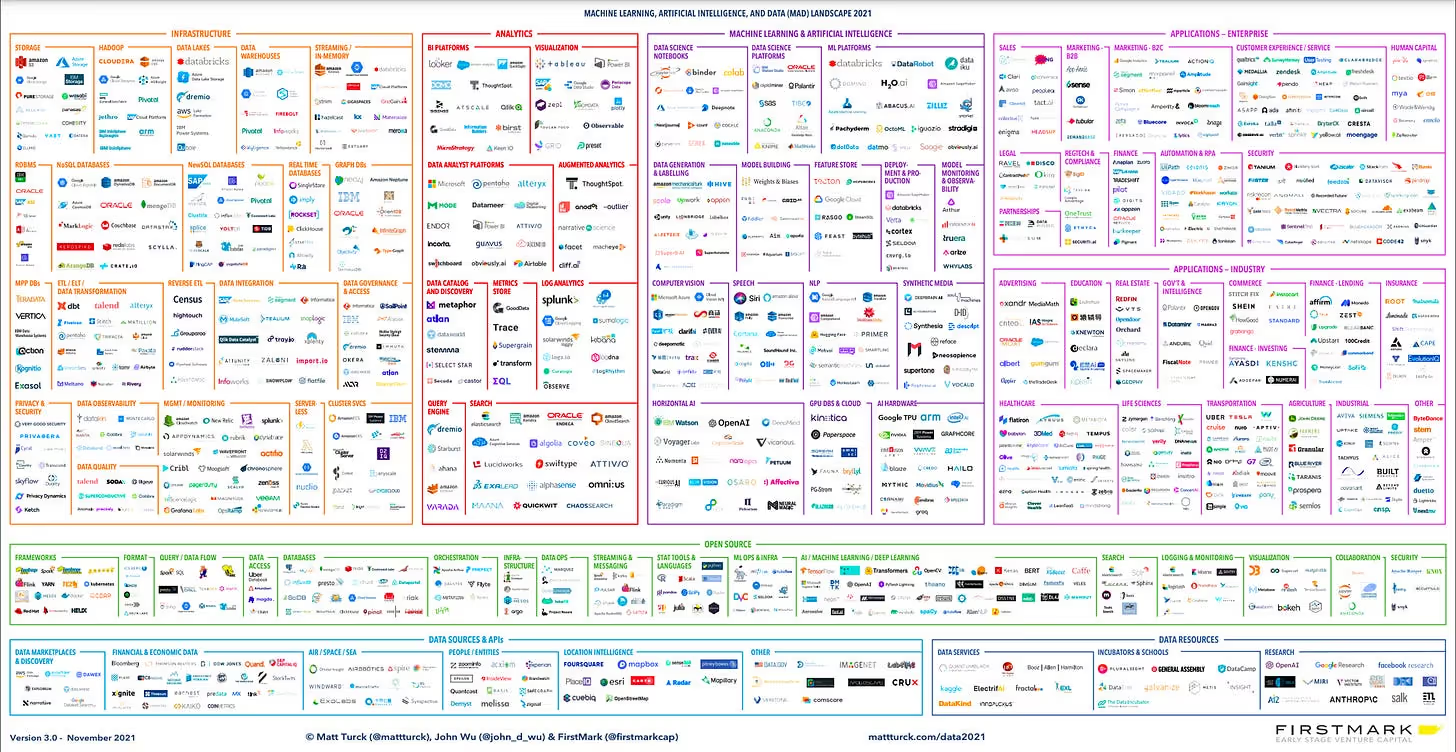

Because of the patchwork approach to fixing the shortcomings of the traditional data stack, the modern data stack produced a plethora of tools, each solving a very narrow problem. Over time, this gave us the MAD ecosystem, which is far from ideal: the ideal state would be one where the hundreds of tools in the ecosystem can talk to each other without becoming an integration overhead for users. But as we have experienced, that is never the case, and such ecosystems end up with significant integration, maintenance, expertise, and cost overheads.

- Expensive data movement

In the historical value chain, data is expected to move across the stages in bulk. Every slice of data is moved forward without any filter to check whether it can help business applications. The storage resources and time needed to move and store huge data dumps are significant and, in fact, account for one of the largest shares of investment for data teams. The returns, however, are not proportionate to that investment.

Solution: Transforming the Data Value Chain to preserve and pump up the ROI of data teams

The solution is to re-engineer the data value chain and view it through a new lens made of the following five paradigms:

- Direct ties between every stage and business ROI

The way to narrow the gap between ROI and data operations is to introduce an outcome-first approach, which is a consequence of becoming data-first. Feedback loops at each stage that continuously validate the process against the monetisation stage keep the impact of various processes, pipelines, and tools in check. This is established through a set of concrete feedback metrics.
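The specific feedback metrics are not defined here, so the sketch below assumes two hypothetical ones: the share of records processed at a stage that the monetisation stage eventually consumes, and the cost per consumed record. The `StageRun` structure, the metric names, and the cost figures are illustrative assumptions, not part of any DFS API.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class StageRun:
    name: str
    cost_usd: float       # hypothetical: compute + storage spent in this stage
    record_ids: Set[str]  # records this stage processed


def feedback_metrics(stages: List[StageRun], consumed_ids: Set[str]) -> Dict[str, Dict[str, float]]:
    """Link every stage to what the monetisation stage actually consumed."""
    report: Dict[str, Dict[str, float]] = {}
    for s in stages:
        used = len(s.record_ids & consumed_ids)
        report[s.name] = {
            # Share of this stage's work that reached a business use case.
            "utilisation": used / len(s.record_ids) if s.record_ids else 0.0,
            # Spend per record that was actually consumed downstream.
            "cost_per_used_record": s.cost_usd / used if used else float("inf"),
        }
    return report


# Example: four records collected, three transformed, two consumed by a dashboard.
runs = [
    StageRun("collection", 40.0, {"a", "b", "c", "d"}),
    StageRun("transformation", 30.0, {"a", "b", "c"}),
]
report = feedback_metrics(runs, consumed_ids={"a", "b"})
```

A stage with low utilisation and a high cost per used record is the kind of signal a feedback loop would surface for intervention.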

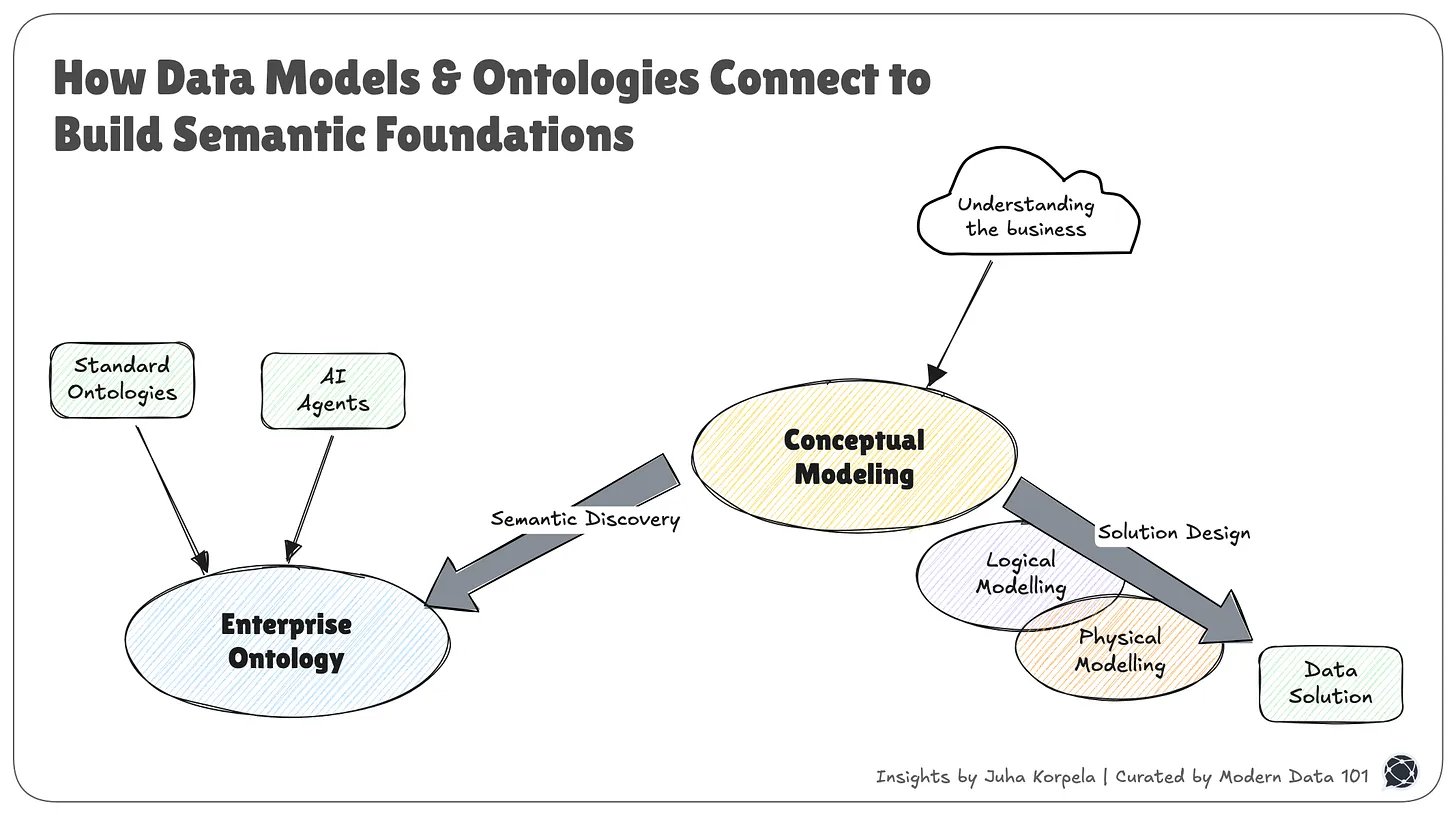

- Right-to-left data management

One of the key changes from the historical data value chain to the new framework is the transformation from passive data activation to active data activation.

Passive data activation is when all available data is pushed into the stages, clogging them, as a consequence of the left-to-right approach. Active data activation, on the other hand, is when data is selectively fed into the system based on semantic instructions coming from the business end of the chain (a right-to-left flow of logic), so that the system forwards only the data that contributes to the use-case-specific business model sitting near the monetisation or data activation layer.


Right-to-left data management is where the business user defines the data models based on use case requirements. This holds even when the data demanded by the model does not exist or has not yet arrived in the org’s data store.

In this reverse approach, business users can further ensure that the data channelled through the data model is of high quality and well-governed through contractual handshakes. Now, the sole task of the data engineer is to map the available data to the contextually rich data model. This does not require them to struggle with or scrape domain knowledge from vague sources. Imagine a team of less frustrated data engineers and nearly happy faces!

The outcome is minimal iterations between data engineers and business users, more concrete and stable data models that adhere to business needs, a shorter path from data to insights, minimal escalations, and fast recovery. This is what essentially fulfils the data product paradigm/stage. For a detailed view of how data products are enabled, feel free to check out “Data First Stack as an enabler for Data Products.”
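Right-to-left management can be sketched as two artefacts with different authors: a model-plus-contract declared by the business user, and a field mapping maintained by the data engineer. Everything below (`Field`, `CHURN_MODEL`, `MAPPING`, the column names) is hypothetical and only illustrates the division of labour, including a model field whose data has not arrived yet.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Field:
    name: str
    dtype: type
    check: Callable[[object], bool] = lambda v: True  # contract expectation


# Step 1 (business user): declare the model and its contract, source-agnostic.
CHURN_MODEL: List[Field] = [
    Field("customer_id", str, lambda v: len(v) > 0),
    Field("monthly_spend", float, lambda v: v >= 0),
    Field("days_since_last_order", int, lambda v: v >= 0),
]

# Step 2 (data engineer): map model fields to columns that exist today.
MAPPING: Dict[str, str] = {"customer_id": "cust_id", "monthly_spend": "spend_usd"}


def unmapped(model: List[Field], mapping: Dict[str, str]) -> List[str]:
    """Fields the model demands but no source provides yet."""
    return [f.name for f in model if f.name not in mapping]


def conform(row: Dict[str, object], model: List[Field], mapping: Dict[str, str]) -> Dict[str, object]:
    """Cast a source row into the model's shape, enforcing the contract."""
    out: Dict[str, object] = {}
    for f in model:
        if f.name not in mapping:
            continue  # data not available yet; the model still stands
        value = f.dtype(row[mapping[f.name]])
        if not f.check(value):
            raise ValueError(f"contract violated for {f.name}: {value!r}")
        out[f.name] = value
    return out
```

Here `unmapped` surfaces the field the business demanded before any source existed for it, while `conform` rejects rows that break the contract instead of silently passing them downstream.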

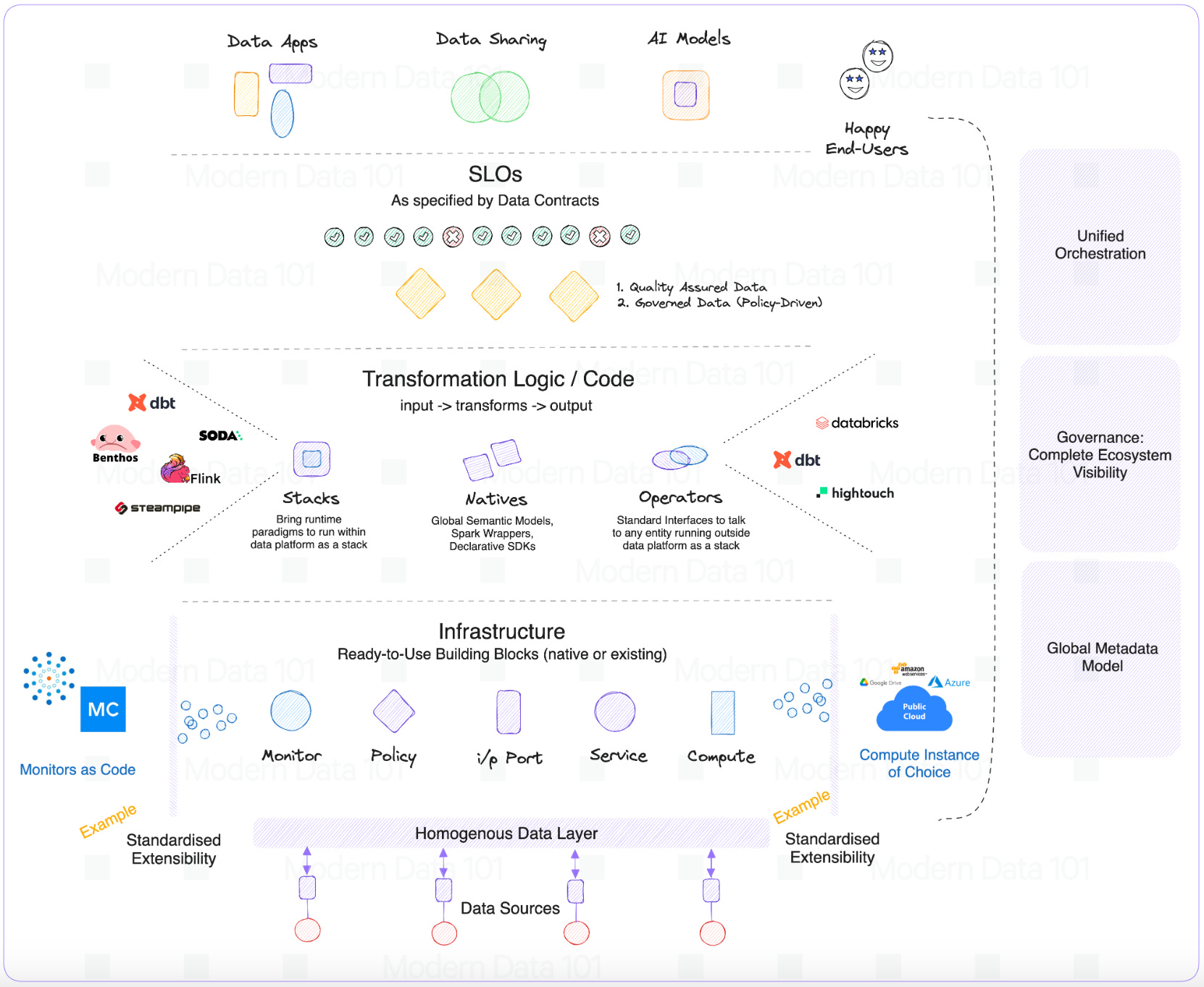

- Data Infrastructure as code for a Unified Architecture

To become data-first and maintenance-last, infrastructure needs to be managed as code through a self-service data infrastructure that enables modular orchestration of resources and converges towards a composable, unified architecture of loosely coupled and tightly integrated building blocks. This solves the problem of isolated tooling, eliminates hundreds of overhead tasks, and saves significant resource costs.

- Intelligent data movement

Intelligent data movement is a direct aftereffect of right-to-left data management. Based on the semantic model defined by business teams, only the data that serves the semantic model is activated by applications and extracted from the original sources to be brought forward across the data value chain (active data activation instead of passive data activation). So, instead of transforming and storing 100% of the data, the value chain only processes and stores, say, 2% of the data that matches the data model requirements and tallies with the data expectations defined in contracts.
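A minimal sketch of active data activation, assuming a semantic model can be reduced to a set of required columns plus a row predicate. The source rows, column names, and EU filter are hypothetical, and the data is constructed so the ratio lands on the illustrative 2%. In a real system the predicate and projection would be pushed down to the source (for example as a SQL `WHERE` clause and column list) so unneeded data never leaves it; the lazy generator here only mimics that behaviour in memory.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, object]


def active_extract(source: Iterable[Row], columns: List[str],
                   predicate: Callable[[Row], bool]) -> Iterator[Row]:
    """Forward only the rows and columns the semantic model asks for."""
    for row in source:
        if predicate(row):
            yield {c: row[c] for c in columns}


# Hypothetical source: 100 orders, of which only 2 belong to the EU region.
source = [
    {"order_id": i, "region": "EU" if i % 50 == 0 else "US",
     "amount": float(i), "raw_payload": "<large blob>"}
    for i in range(100)
]

# The semantic model for an EU revenue use case needs two columns and EU rows only.
selected = list(active_extract(source, ["order_id", "amount"],
                               lambda r: r["region"] == "EU"))
share_moved = len(selected) / len(source)
```

Only two slim rows move forward; the bulky `raw_payload` column and the 98 irrelevant rows are never transformed or stored.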

These engineering and conceptual shifts are directly facilitated by the Data First Stack (DFS), which proposes a unified architecture that is declaratively manageable with a state-of-the-art developer experience. Unlike legacy modifications, the impact of DFS is visible within weeks instead of months or years.

Data-first, as the name suggests, means putting data and data-powered decisions first while de-prioritizing everything else, either through abstractions or intelligent design architectures. This is easier to understand from the opposite direction: “data-last”.

Current practices, including the modern data stack (MDS), are an implementation of “data-last”, where massive efforts, resources, and time are spent on managing, processing, and maintaining data infrastructures to enable legacy data value chain frameworks. Data and data applications are literally lost in this translation and have become the last focus points for data-centric teams, creating extremely challenging business minefields for data producers and data consumers.

Implementation pillars to Transform the Data Value Chain: Technical landscape of the Data-First Stack

Data Infrastructure as a Service (DIaaS)

The Data-First stack is synonymous with a programmable data platform that encapsulates the low-level data infrastructure and enables data developers to shift from build mode to operate mode. DFS achieves this through infrastructure-as-code capabilities to create and deploy config files or new resources and decide how to provision, deploy, and manage them. Data developers can, therefore, declaratively control data pipelines through a single workload specification, or single-point management.

Data developers can deploy workloads quickly because standard base configurations, which do not require environment-specific variables, eliminate configuration drift and the sprawl of config files. In short, DFS enables workload-centric development, where data developers simply declare workload requirements and the data-first stack takes care of provisioning resources and resolving dependencies. The impact is felt immediately, with a visible increase in deployment frequency.
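The workload-centric idea can be sketched as follows, assuming a hypothetical spec format (this is not the DFS or DataOS specification). The developer declares only resources, per-resource overrides, and dependencies; a shared base configuration with no environment-specific variables fills in the rest, and Python's standard `graphlib.TopologicalSorter` (Python 3.9+) resolves the provisioning order.

```python
from graphlib import TopologicalSorter
from typing import Dict, List

# Hypothetical base configuration shared by every environment: no env-specific variables.
BASE: Dict[str, object] = {"runtime": "python3.11", "cpu": "1", "memory": "2Gi", "retries": 2}

# What a data developer declares: resources, overrides, and dependencies only.
WORKLOAD: Dict[str, Dict[str, dict]] = {
    "resources": {
        "publish_metrics": {"depends_on": ["clean_orders"]},
        "clean_orders": {"depends_on": ["ingest_orders"], "memory": "4Gi"},
        "ingest_orders": {},
    },
}


def plan(workload: Dict[str, Dict[str, dict]]) -> List[Dict[str, object]]:
    """Expand a declared workload into an ordered provisioning plan."""
    resources = workload["resources"]
    # graphlib expects a mapping of node -> set of predecessors.
    graph = {name: set(spec.get("depends_on", [])) for name, spec in resources.items()}
    steps: List[Dict[str, object]] = []
    for name in TopologicalSorter(graph).static_order():
        overrides = {k: v for k, v in resources[name].items() if k != "depends_on"}
        steps.append({"resource": name, **BASE, **overrides})  # overrides win over base
    return steps
```

The resources are deliberately declared out of order; the plan still provisions `ingest_orders` first, which is the kind of dependency resolution the platform is described as taking over.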

Consider all the benefits of DevOps replicated in the data stack through a single shift in approach: managing infrastructure as code. This is in stark contrast to going the whole nine yards to ensure observability, governance, CI/CD, etc., as isolated initiatives, each with a bucket load of tooling and integration overheads, just to ultimately achieve “DataOps.” How about implementing DevOps itself?

This approach consequently reduces cruft (technical debt), since data pipelines are declaratively managed with minimal manual intervention, allowing both scale and speed (time to ROI). Higher-order operations such as data governance, observability, and metadata management are also fulfilled by the infrastructure based on declarative requirements.

Data as a software

In prevalent data stacks, we do not just suffer from data silos, but there’s a much deeper problem. We also suffer tremendously from data code silos. DFS treats data as software by enabling the management of infrastructure as code (IaC). IaC wraps code systematically to enable object-oriented capabilities such as abstraction, encapsulation, modularity, inheritance, and polymorphism across all the components of the data stack. As an architectural quantum, the code becomes a part of the independent unit that is served as a Data Product.
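To illustrate what object-oriented capabilities across data-stack components could look like, here is a sketch with hypothetical resource types. An abstract `Resource` base class provides abstraction and shared, inherited behaviour; each subclass encapsulates its own configuration; and a `DataProduct` treats all resources polymorphically when building its manifest. The class names and the `s3://example-bucket/orders` URI are placeholders, not DFS primitives.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Resource(ABC):
    name: str
    owner: str
    tags: List[str] = field(default_factory=list)

    @abstractmethod
    def kind(self) -> str: ...

    def to_spec(self) -> Dict[str, object]:
        # Shared behaviour inherited by every resource type.
        return {"kind": self.kind(), "name": self.name, "owner": self.owner, "tags": self.tags}


@dataclass
class StorageConnection(Resource):
    uri: str = ""

    def kind(self) -> str:
        return "storage"

    def to_spec(self) -> Dict[str, object]:
        return {**super().to_spec(), "uri": self.uri}


@dataclass
class Pipeline(Resource):
    steps: List[str] = field(default_factory=list)

    def kind(self) -> str:
        return "pipeline"

    def to_spec(self) -> Dict[str, object]:
        return {**super().to_spec(), "steps": self.steps}


@dataclass
class DataProduct:
    name: str
    resources: List[Resource]

    def manifest(self) -> List[Dict[str, object]]:
        # Polymorphism: each resource serialises itself; the product needs no type checks.
        return [r.to_spec() for r in self.resources]


product = DataProduct("orders", [
    StorageConnection("orders-lake", "sales", uri="s3://example-bucket/orders"),
    Pipeline("orders-clean", "sales", steps=["dedupe", "cast_types"]),
])
```

Because the code and its resources travel together in one `DataProduct`, the unit can be versioned, reviewed, and reused like any other software artefact.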

DFS implements data as a software paradigm by allowing programmatic access to the entire data lifecycle. It enables management and independent provisioning of low-level components with state-of-the-art data developer experience. Data developers can customize high-level abstractions on a need basis, use lean CLI integrations they are familiar with, and overall experience software-like robustness through importable code, prompting interfaces with smart recommendations, and on-the-fly debugging capabilities. Version control, review processes, asset and artefact reusability,

Data as a product is a subset of the data-as-a-software paradigm wherein data inherently behaves like a product when managed like a software product, and the system is able to serve data products on the fly. This approach enables high clarity into downstream and upstream impacts along with the flexibility to zoom in and modify modular components.

Data-First == Artefact-first, CLI-second, GUI-last

DFS makes life easy for data developers by keeping their focus on data instead of on complex maintenance mandates. The Command Line Interface (CLI) takes second precedence, since it gives data developers a familiar environment in which to run experiments and develop their code. The artefact-first approach puts data at the centre of the platform and provides a flexible infrastructure with a suite of loosely coupled and tightly integrable primitives that are highly interoperable. Data developers can use DFS to create complex workflows with ease, taking advantage of its modular and scalable design.

Being artefact-first with open standards, DFS can also be used as an architectural layer on top of any existing data infrastructure and enable it to interact with heterogeneous components, both native and external to DFS. Thus, organizations can integrate their existing data infrastructure with new and innovative technologies without having to completely overhaul their existing systems.

It’s a complete self-service interface for developers where they can declaratively manage resources through APIs, CLIs, and even GUIs if it strikes their fancy. The intuitive GUIs allow the developers to visualize resource allocation and streamline the resource management process. This can lead to significant time savings and enhanced productivity for the developers, as they can easily manage the resources without the need for extensive technical knowledge. GUIs also allow business users to directly integrate business logic into data models for seamless collaboration.

Central Control, Multi-Cloud Data Plane

The data-first stack is forked into a conceptual dual-plane architecture to decouple the governance and execution of data applications. This gives the flexibility to run the DFS in a hybrid environment and deploy a multi-tenant data architecture. Organizations can manage cloud storage and compute instances with a centralised control plane and run isolated projects or environments across multiple data planes.

The central control plane is typically charged with metadata, orchestration, and governance responsibilities, since these components need to seep into multiple endpoints to allow holistic management. The central control plane is the data admin’s headquarters.

The data plane is charged with more data-specific activities such as ingestion, transformation, federated queries, stream processing, and declarative app config management. Users can create multiple data planes based on their requirements, such as domain-specific data planes, use-case-specific data planes, environment-specific data planes, etc.
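The split between governance and execution can be sketched with two hypothetical classes: a single `ControlPlane` that holds policies and an audit log (standing in for central metadata), and several `DataPlane` instances that only execute jobs the control plane approves. The plane names, clouds, and job names are illustrative assumptions, not DFS resources.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class DataPlane:
    name: str   # e.g. a domain-, use-case-, or environment-specific plane
    cloud: str
    executed: List[str] = field(default_factory=list)

    def run(self, job: str) -> None:
        # Execution (ingestion, transformation, queries...) stays local to this plane.
        self.executed.append(job)


@dataclass
class ControlPlane:
    planes: Dict[str, DataPlane] = field(default_factory=dict)
    allowed: Dict[str, Set[str]] = field(default_factory=dict)  # governance: plane -> permitted jobs
    audit_log: List[str] = field(default_factory=list)          # central metadata

    def register(self, plane: DataPlane, permitted_jobs: Set[str]) -> None:
        self.planes[plane.name] = plane
        self.allowed[plane.name] = set(permitted_jobs)

    def dispatch(self, plane_name: str, job: str) -> bool:
        ok = job in self.allowed.get(plane_name, set())
        self.audit_log.append(f"{plane_name}:{job}:{'ok' if ok else 'denied'}")
        if ok:
            self.planes[plane_name].run(job)
        return ok


cp = ControlPlane()
cp.register(DataPlane("marketing-prod", "aws"), {"ingest_campaigns"})
cp.register(DataPlane("finance-dev", "gcp"), {"transform_ledger"})
cp.dispatch("marketing-prod", "ingest_campaigns")  # allowed, runs on AWS plane
cp.dispatch("finance-dev", "ingest_campaigns")     # denied by central governance
```

Policy and audit live in one place, while each data plane can sit in a different cloud and be added or removed without touching the others.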

Summary

The key transformation to the data value chain would be the inclusion of the data product paradigm. In this article, we focused on other aspects, such as the right-to-left paradigm, feedback loops or direct ties with business impact, unified composable architecture, and intelligent data movement.

We also covered how the data-first stack enables the above transformational requirements through its technical landscape:

- Data Infrastructure as a Service (DIaaS)

- Data as a software

- Data-First == Artefact-first, CLI-second, GUI-last

- Central Control, Multi-Cloud Data Plane

The data product aspect is broader and requires a Part 2 to explore in depth. It includes three components: Code, Infrastructure, and Data & Metadata. In “Re-engineering the data value chain Part 2”, we’ll dive deeper into the data value chain and, consequently, into each of the data product components to see how they fit into the new framework. In Part 2, a few more technical pillars of the Data First Stack will also surface, especially those that help converge data into data products.

Since its inception, ModernData101 has garnered a select group of Data Leaders and Practitioners among its readership. We’d love to welcome more experts in the field to share their story here and connect with more folks building for better. If you have a story to tell, feel free to email your title and a brief synopsis to the Editor.

Author Connect 🖋️

Animesh Kumar

Animesh Kumar is the Co-Founder and Chief Technology Officer at The Modern Data Company, where he leads the design and development of DataOS, the company’s flagship data operating system. With over two decades in data engineering and platform development, he is also the founding curator of Modern Data 101, an independent community for data leaders and practitioners, and a contributor to the Data Developer Platform (DDP) specification, shaping how the industry approaches data products and platforms.


More about

Re-Engineering the Data Value Chain - Part 1
