TL;DR

TOC

Big Picture at a glance

What is the job of a data model

Understanding Data Product as a solvent for Modeling Challenges

- What is a Data Product

- Visualising Data Products on a DDP Canvas

- How Data Products resolve challenges in data modeling

Let’s not deny it; we’ve all been consumed by the aesthetic symmetry of the Data Product. If you’ve not yet come across it, you’re probably living under a rock. But don’t worry, before we get into any higher-order solutions, we’ll dive into the fundamental concepts as we always do.

On the other hand, data modeling has been an ancient warrior, enabling data teams to navigate the wild jungle of increasingly dense and complex data. But if data modeling were the perfect solution,

→ Why are organisations increasingly complaining about poor ROI from their data initiatives?

→ Why are data teams under an ever-increasing burden of proving the value of their data?

→ Why are data models increasingly clogged and costlier than their outcome?

Well, the answer is we haven’t been doing data modeling right. While data modeling as a framework is ideal, the process of constructing a data model suffers from weak foundations.

It lacks consensus and transparency between business teams that own the business logic and IT teams that own the physical data. While one side restructures even mildly, the other side is thrown into chaos and left to figure out how to reflect the changes delicately enough not to break any pipeline.

In this article, we’ll give a brief overview of data modeling, data products, the solutions that the data product paradigm brings to modeling, and the enablers of those solutions.

This piece is ideal for you if you lead a data team or are in a position to influence data development in the team or organisation.

Big picture at a glance

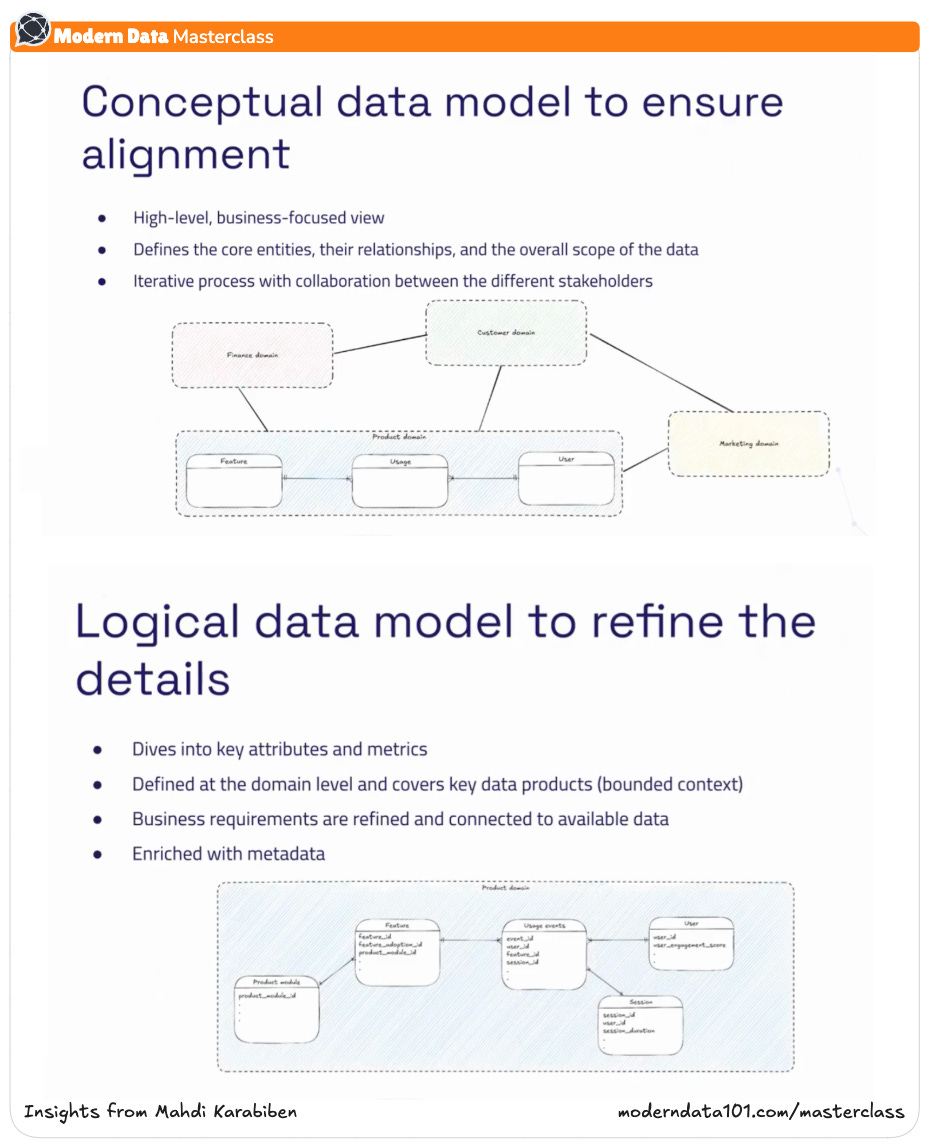

Data modeling is not the sole pillar that bears the weight of raging data. In fact, it comes much later, after several pillars across the data journey. To understand the problems associated with data modeling, we must have a high-level understanding of all the layers that come before it.

Data modeling sits almost at the far end, in the semantic layer, right before data gets operationalised for disparate use cases.

What is the job of the data model?

Now that we know approximately where a data model sits in the enormous data landscape, we’ll look at the role of the data model and what it is able to achieve from its office on the semantic floor.

If you were a data model, you would essentially become a librarian. You would need to:

- Answer a wide range of queries from data consumers across disparate domains. Serve the books the reader is looking for.

- Enable data producers to produce relevant data and present them on the right shelves.

- Have a good understanding of relationships between different data entities, so you can present related information.

- Readjust shelves whenever new books come in to make space for new information in the right position.

- Maintain a set of metrics or KPIs to understand and gain insights from existing data inventory.

- Maintain meta information on library users and visitors to govern access.

- Maintain meta information on books to enable observation of tampered or damaged books.

But as we saw in the introduction, data models do not scale well over time in prevalent data stacks, given the constant to and fro with data producers and consumers. This image should summarise the push and pull and highlight the burden it imposes on the central data engineering team and the anxiety it results in for producers and consumers.

Understanding the Data Product as a Solvent for Modeling Challenges

In this spiel, we’ll get an overview of:

- Understanding Data Products

- Visualising Data Products on a DDP Canvas

- How Data Products Resolve Challenges in Traditional Modeling

What is a Data Product

I feel the need to clearly call out what a data product is across most pieces where I mention Data Products. While it feels redundant while writing, it is essential since the phrase “data product” has been unintentionally bloated across the community.

I find that tons of brands in the data space are somehow sticking a “data product” tag to their narratives, and when you open up the product, you find little to no resemblance to data products as they were intended.

A data product is definitely not just data. It’s the data, along with the enablers for that data.

🗒️ Data Product = Data & Metadata + Code + Infrastructure

This blurb should give more clarity around each of these components and how they are associated and enabled: “Data First Stack as an Enabler for Data Products” →
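To make the formula above a little more tangible, here is a minimal Python sketch of a data product as a single unit that carries its data together with its enablers. The class name `DataProduct`, its fields, and the `missing_components` helper are all hypothetical names chosen for illustration; they are not taken from any DDP specification or product.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical container mirroring: Data & Metadata + Code + Infrastructure."""

    name: str
    data: list[dict]                                     # the records themselves
    metadata: dict = field(default_factory=dict)         # owner, semantics, lineage
    code: list[str] = field(default_factory=list)        # names of transformation steps
    infrastructure: dict = field(default_factory=dict)   # storage and compute settings

    def missing_components(self) -> list[str]:
        """Return the enablers that are still empty for this product."""
        parts = {
            "metadata": self.metadata,
            "code": self.code,
            "infrastructure": self.infrastructure,
        }
        return [label for label, value in parts.items() if not value]


# "Just data" does not qualify: all three enablers are missing.
raw_only = DataProduct(name="customer360", data=[{"customer_id": 1}])

# The same data, shipped with its enablers (values are made up).
complete = DataProduct(
    name="customer360",
    data=[{"customer_id": 1}],
    metadata={"owner": "sales-domain"},
    code=["deduplicate_customers"],
    infrastructure={"storage": "lakehouse", "compute": "small"},
)
```

Calling `raw_only.missing_components()` lists everything that separates a plain dataset from a data product in this toy model, while `complete.missing_components()` comes back empty.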

Visualising Data Products on a DDP Canvas

Now that we are somewhat clear on what makes up a Data Product, let’s visualise it in terms of the data landscape.

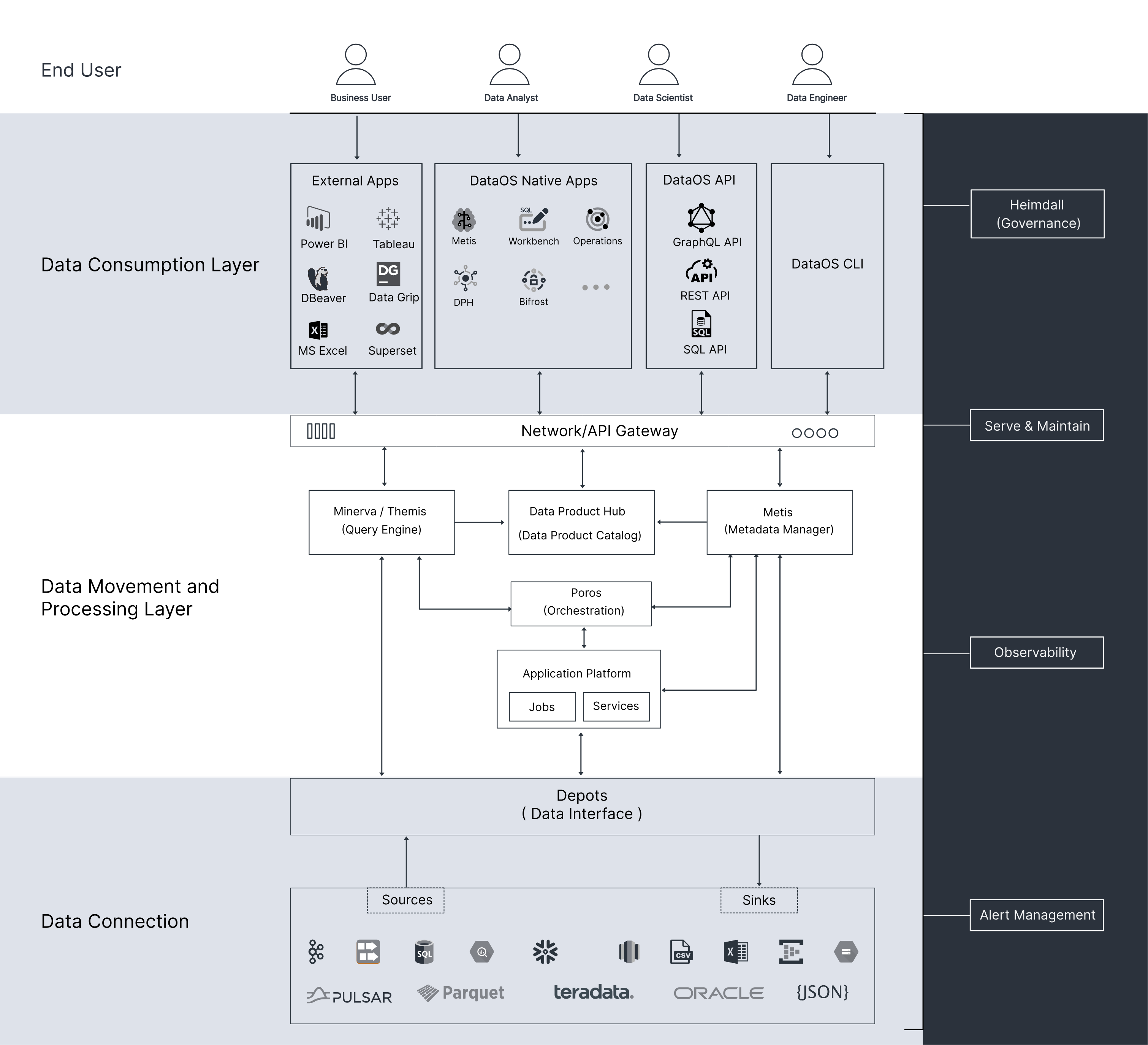

The above is a 10,000 ft. view of Data Products as enabled through the infrastructure specification of a data developer platform (DDP).

Some key observations to note here:

- Dual Planes

The central control plane has complete visibility across the data stack and is able to oversee metadata across polyglot sources and data products. The data planes, on the other hand, are isolated instances that are deployed for niche domains, specific data product initiatives, or even for particular use cases.

- Infrastructure Isolation

Each data plane enables complete isolation, which can be leveraged for the data product construct. Every isolated data plane is embedded with building blocks or primitives to define customised infrastructures. Storage and Compute are examples of such atomic primitives.

- Platform Orchestration

The platform orchestrator is the heart of the DDP infrastructure spec and enables single-point change management through standard configuration templates. The single config file abstracts the data developer from dependencies and complexities across multiple environments, planes, or files. This directly takes off the burden of maintenance overheads that we suffer from while integrating a basket of point solutions in the modern data stack.

- Infrastructure as Code (IaC)

Infrastructure as code is the practice of building and managing infrastructure components as code, enabling programmatic access to data. Imagine the plethora of capabilities available at your fingertips by virtue of the OOP paradigm. IaC enables the higher-level construct of approaching data as software and wraps code systematically to enable object-oriented capabilities such as abstraction, encapsulation, modularity, inheritance, and polymorphism across all the components of the data stack. As an architectural quantum, the code becomes part of the independent unit that is served as a Data Product.

- Local Governance

While central governance is critical to managing cross-domain and global policies, governance at the data product level is also essential to maintain local hygiene. This includes local policies that specific domains are adept with, as well as SLAs and metrics as part of the operations port of the data product.

- Embedded Metadata

Every operation in the data stack is surrounded by meta information that needs to be captured and embedded with every data product vertical. If a platform orchestrator is the heart of a platform, metadata is the soul. It gives meaning to the data and consequently enriches the data product by surfacing up the context and hidden meta relations.

- Disparate Output Ports

Every data product has the ability to present the same data in multiple formats to stay as close to practical use cases as possible. Every use case or project consumes data in various forms across different personas. Without the inherent ability to be flexible format-wise, there’s barely any chance for data product adoption in the real world.
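The idea of disparate output ports can be sketched in a few lines of Python: one set of records, served in whichever format the consumer asks for. This is only an illustration under assumed names (`ROWS`, `OUTPUT_PORTS`, `serve`), using the standard `json` and `csv` modules; a real platform would expose ports such as SQL endpoints or APIs rather than in-memory strings.

```python
import csv
import io
import json

# Made-up records standing in for the data inside a data product.
ROWS = [
    {"customer_id": 1, "segment": "retail"},
    {"customer_id": 2, "segment": "enterprise"},
]


def to_json(rows):
    """Serialise the rows for an application or API consumer."""
    return json.dumps(rows)


def to_csv(rows):
    """Serialise the same rows for a spreadsheet or BI consumer."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical registry: each output port is just a named formatter.
OUTPUT_PORTS = {"json": to_json, "csv": to_csv}


def serve(rows, port):
    """Present the same data through the requested output port."""
    if port not in OUTPUT_PORTS:
        raise ValueError(f"unknown output port: {port}")
    return OUTPUT_PORTS[port](rows)
```

Adding a new format here means registering one more function in `OUTPUT_PORTS`; the underlying data is untouched, which is the property the output-port idea relies on.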

How Data Products Resolve Challenges in Traditional Modeling

Before we move to the solutions, let’s have a glance at the most persistent challenges of data modeling.

Challenges of traditional modeling

- Explosion of changes leading to complex and heavy data models that are unable to answer queries that tend to be novel.

- Data governance and quality are afterthoughts arriving much later and, therefore, are unable to stick.

- Unbalanced tradeoffs in the degree of normalisation and denormalisation.

- Unending iterations between teams to update, fix, or create new data models.

- Fragile pipelines that break at any hint of data evolution, which is, of course, very frequent when it comes to data.

- No understanding of the business landscape and requirements by the creators of the data model (central engineering teams), which explodes the above issues even further.

Challenges of relatively modern data delivery

- Given that data from disparate sources is dumped as-is into data lakes, data engineers are left to fend for themselves to make the data fit for purpose.

- The data lake soon transforms into a data swamp and becomes the responsibility of a few heroic engineers who are the only ones familiar with the maze.

- Semantics, governance, and quality are essentially burned at the stake. If you don’t understand the swampy data anymore, how would you define rules and policies around it?

- Business barely has any visibility into the data and has massive centralised bottlenecks to operationalise data for business decisions. Atomic real-time insights are far off when even batch data is difficult to understand and process.

This article is a great resource to learn about these challenges at a glance.

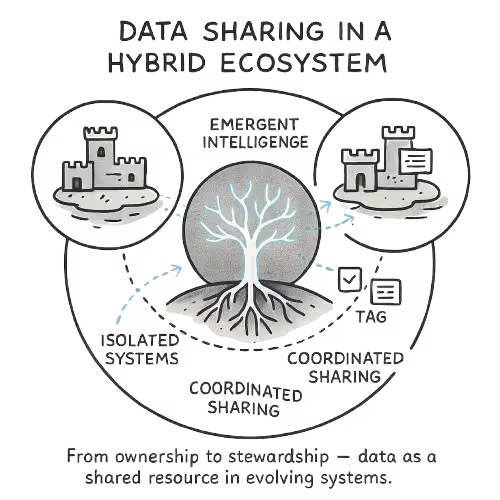

Now, let’s look at the data product landscape one more time.

Decoupled Data Modeling

This landscape proposes a logical abstraction or a semantic data model that essentially decouples the modeling layer from the physical realm of data. The Semantic Model consumes data entities fed as data products.

- Enables right-to-left engineering, where business teams define the business landscape and the purpose of the data. Central engineering teams, who have only a partial view of the business, are freed from repeatedly fixing subpar models and can stick to mapping.

- Shifts ownership of the model to the business front, allowing flexibility while also placing accountability on business users.

- Avoids data redundancy and expensive, unnecessary migration at the physical level by enabling materialisation through logical channels that are activated on demand.

- Provides more robust and well-defined local governance in terms of security policies, quality rules enforced through SLAs, and observability metrics for data and infra, given the expertise of the domain users.
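A rough way to picture this decoupling is a small mapping layer between business-facing names and physical columns. The Python sketch below is an assumption-laden toy: the table `crm.cust_tbl`, its column names, and the `materialise` function are invented for illustration and do not reflect how any particular semantic layer is implemented.

```python
# Physical layer: columns as engineering stores them (made-up example data).
PHYSICAL_TABLES = {
    "crm.cust_tbl": [
        {"cst_id": 1, "cst_nm": "Ada", "rgn_cd": "EU"},
        {"cst_id": 2, "cst_nm": "Lin", "rgn_cd": "APAC"},
    ]
}

# Semantic layer: the business owns entity and field names,
# engineering only maintains the mapping to physical columns.
SEMANTIC_MODEL = {
    "customer": {
        "source": "crm.cust_tbl",
        "fields": {"customer_id": "cst_id", "name": "cst_nm", "region": "rgn_cd"},
    }
}


def materialise(entity, wanted_fields):
    """Resolve a logical entity to physical rows only when it is requested."""
    spec = SEMANTIC_MODEL[entity]
    mapping = spec["fields"]
    rows = PHYSICAL_TABLES[spec["source"]]
    return [{name: row[mapping[name]] for name in wanted_fields} for row in rows]


# A consumer asks in business terms and never sees the physical names.
customers_by_region = materialise("customer", ["name", "region"])
```

If engineering renames `rgn_cd` in the physical table, only the `fields` mapping changes; queries written against `customer.region` keep working, and nothing is copied until `materialise` is called.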

Embedded Quality and Governance instead of Afterthoughts

It is essential to realise that not all data can be curated as data products and, in fact, shouldn’t be curated as data products. Otherwise, it would result in more data around data products than the actual data owned by the organisation. It is simply not practical.

Instead, an organisation should own only a select few data products, and these should represent the core entities the business works with. In real-world scenarios, most businesses operate around a limited set of 10-15 key entities. It, therefore, makes sense to curate high-definition, product-like experiences for these select few. For example, a customer360 data product gives a well-rounded view of the customer entity.

Every such data product has inherent quality checks and governance standards that are declaratively taken care of by the data product’s underlying infrastructure once the SLAs are defined by business counterparts and mapped to the physical layer by engineering counterparts. Any data that is activated through the semantic data model is, therefore, a derivative of high-quality and well-governed data products channelling into the model.
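As a loose illustration of what "declaratively taken care of" could look like, the sketch below keeps the quality rules as plain data and lets a generic checker enforce them. The rule vocabulary (`not_null`, `range`), the field names, and the `violations` function are hypothetical; actual SLA formats will differ from platform to platform.

```python
# Rules a business counterpart might declare for a customer entity (hypothetical).
SLA_RULES = [
    {"field": "customer_id", "check": "not_null"},
    {"field": "age", "check": "range", "min": 0, "max": 120},
]


def violations(rows, rules):
    """Return (row index, field, check) for every rule a row fails."""
    found = []
    for index, row in enumerate(rows):
        for rule in rules:
            value = row.get(rule["field"])
            if rule["check"] == "not_null" and value is None:
                found.append((index, rule["field"], "not_null"))
            elif rule["check"] == "range" and value is not None:
                if not rule["min"] <= value <= rule["max"]:
                    found.append((index, rule["field"], "range"))
    return found


# Made-up rows: the second lacks an id, the third has an implausible age.
SAMPLE_ROWS = [
    {"customer_id": 1, "age": 34},
    {"customer_id": None, "age": 29},
    {"customer_id": 3, "age": 140},
]

report = violations(SAMPLE_ROWS, SLA_RULES)
```

The point of the design is that the checker never changes when the business adds or tightens a rule; only the declared `SLA_RULES` do.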

Managing Data Evolution

Data evolution is one of the biggest challenges for rigid data pipelines and the primary cause of breakages at the slightest change or disruption in data.

In the data product landscape, every data product is maintained by a specification file or a data contract that, alongside quality and governance conditions, also specifies the semantics and shape of the data. In the landscape shown above, this corresponds to the ‘SLAs’ component.

In prevalent stacks, every time a change is initiated, such as a difference in column type, column meaning, or name, it breaks all downstream pipelines. In a stack embedded with contracts, on the other hand, the breakage or change is caught at the specification level itself. Paired with the respective config file in a DDP, this allows dynamic configuration management or single-point change across pipelines, layers, environments, and data planes.

If the change is undesirable, it is pushed back to the producer from the higher spec level for revision or validation.
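The push-back flow can be sketched as a comparison between the contract and the schema a producer proposes. Everything in this Python example is assumed for illustration: the `CONTRACT` columns, the type labels, and the `review_change` function are not drawn from any real contract format. Note that it only catches changes in column name and type; a change in column meaning would need richer semantics than this toy carries.

```python
# Shape of the data as agreed in the contract (hypothetical columns and types).
CONTRACT = {"customer_id": "int", "email": "str", "signup_date": "date"}


def diff_against_contract(contract, proposed):
    """List breaking differences between the contract and a proposed schema."""
    problems = []
    for column, expected_type in contract.items():
        if column not in proposed:
            problems.append(f"missing column: {column}")
        elif proposed[column] != expected_type:
            problems.append(
                f"type change on {column}: {expected_type} -> {proposed[column]}"
            )
    return problems


def review_change(contract, proposed):
    """Accept a change, or push it back to the producer with the reasons."""
    problems = diff_against_contract(contract, proposed)
    status = "pushed_back" if problems else "accepted"
    return {"status": status, "problems": problems}


# Three producer-side changes: a rename, a type change, and an additive column.
renamed = {"customer_id": "int", "email_address": "str", "signup_date": "date"}
retyped = {"customer_id": "str", "email": "str", "signup_date": "date"}
additive = {**CONTRACT, "loyalty_tier": "str"}
```

Here the rename and the type change are stopped at the specification level before any pipeline runs, while the additive column passes because nothing downstream depends on it yet.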

Summary

In this article, we shared an overview of the objectives of data models, data products as a construct and the data product landscape as implemented through a DDP infra spec, and understood how it becomes an enabler for data modeling.

We discussed how abstracting the data model as a semantic construct helps to shift ownership and accountability to business teams, thus unburdening the central teams and removing bottlenecks.

And in conclusion, we saw how data products as a layer before logically abstracted data models largely solve data quality, governance, evolution, and collaboration challenges that are often seen in traditional and modern data delivery approaches.

Most of the concepts in the article are largely conveyed through diagrams instead of text for ease of consumption.

Authors

Travis Thompson (Co-Author): Travis is the Chief Architect of the Data Operating System Infrastructure Specification. Over the course of 30 years in all things data and engineering, he has designed state-of-the-art architectures and solutions for top organisations, the likes of GAP, Iterative, MuleSoft, HP, and more.

Animesh Kumar (Co-Author): Animesh is the Chief Technology Officer & Co-Founder @Modern, and a co-creator of the Data Operating System Infrastructure Specification. During his 20+ years in the data engineering space, he has architected engineering solutions for a wide range of A-Players, including NFL, GAP, Verizon, Rediff, Reliance, SGWS, Gensler, TOI, and more.

Since its inception, ModernData101 has garnered a select group of Data Leaders and Practitioners among its readership. We’d love to welcome more experts in the field to share their story here and connect with more folks building for better. If you have a story to tell, feel free to email your title and a brief synopsis to the Email Editor.

Author Connect 🖋️

Animesh Kumar

Animesh Kumar is the Co-Founder and Chief Technology Officer at The Modern Data Company, where he leads the design and development of DataOS, the company’s flagship data operating system. With over two decades in data engineering and platform development, he is also the founding curator of Modern Data 101, an independent community for data leaders and practitioners, and a contributor to the Data Developer Platform (DDP) specification, shaping how the industry approaches data products and platforms.

